The documented and recommended way to reference a custom assembly from an SSIS Script Task or Script Component is to install it in the Global Assembly Cache (GAC). However, this is not always possible or simple to do.

Here’s a simple workaround for loading an assembly from an arbitrary location: register an AppDomain.AssemblyResolve event handler. The .NET Framework documentation describes the event as follows:

> The .NET Framework provides the AppDomain.AssemblyResolve event for applications that require greater control over assembly loading. By handling this event, your application can load an assembly into the load context from outside the normal probing paths, select which of several assembly versions to load, emit a dynamic assembly and return it, and so on.

That is exactly what we need. The question is where and how to wire it up. The trick is to realize that .NET’s Just-in-Time (JIT) compilation is responsible for loading dependent assemblies, so the assemblies referenced in a type’s method won’t be loaded until just before that method is executed. This means that a handler wired up in the type’s static constructor is already in place when the referenced types are first requested.

When you create an SSIS Script Task or Script Component, SSIS generates a class called ScriptMain, and you can wire up the event in a static constructor for that type. A static constructor is guaranteed to be called exactly once, “before the first instance is created or any static members are referenced,” and therefore before the dependent assemblies are loaded.

    [Microsoft.SqlServer.Dts.Tasks.ScriptTask.SSISScriptTaskEntryPointAttribute]
    public partial class ScriptMain : Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase
    {
        // Static constructor: runs once, before the first instance is created
        // or any static member is referenced.
        static ScriptMain()
        {
            AppDomain.CurrentDomain.AssemblyResolve += new ResolveEventHandler(CurrentDomain_AssemblyResolve);
        }

        static System.Reflection.Assembly CurrentDomain_AssemblyResolve(object sender, ResolveEventArgs args)
        {
            if (args.Name.Contains("ssisHelper"))
            {
                string path = @"c:\temp\";
                return System.Reflection.Assembly.LoadFile(System.IO.Path.Combine(path, "ssisHelper.dll"));
            }

            // Returning null tells the runtime this handler cannot resolve the request.
            return null;
        }

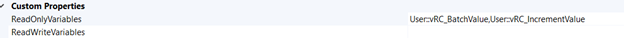

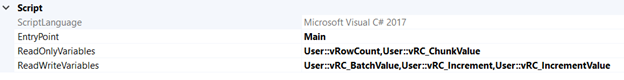

. . .

You can then drop the referenced assemblies in a well-known location on the SSIS server, or use a package variable to refer to the location and pass that in to the Script Task or Script Component.
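When several helper assemblies sit in the same well-known folder, testing `args.Name.Contains(...)` once per assembly gets repetitive, and a substring test can also match names it was not meant to. The following is a minimal sketch of one alternative, not the original author's code: `FolderAssemblyResolver` and its three methods are hypothetical names introduced here for illustration. It parses the requested display name with `System.Reflection.AssemblyName` and looks for a DLL with that simple name in the given folder.

```csharp
using System;
using System.IO;
using System.Reflection;

// Hypothetical helper (not part of SSIS): resolves assemblies by simple name
// from a single folder.
public static class FolderAssemblyResolver
{
    // Maps a display name such as
    // "ssisHelper, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null"
    // to "<directory>/ssisHelper.dll".
    public static string GetCandidatePath(string requestedName, string directory)
    {
        string simpleName = new AssemblyName(requestedName).Name;
        return Path.Combine(directory, simpleName + ".dll");
    }

    // Loads the assembly if a matching file exists; otherwise returns null so
    // the runtime treats the request as unresolved by this handler.
    public static Assembly Resolve(string requestedName, string directory)
    {
        string candidate = GetCandidatePath(requestedName, directory);
        return File.Exists(candidate) ? Assembly.LoadFrom(candidate) : null;
    }

    // Subscribes a handler that resolves every request against the folder.
    public static void Register(string directory)
    {
        AppDomain.CurrentDomain.AssemblyResolve +=
            (sender, args) => Resolve(args.Name, directory);
    }
}
```

Under these assumptions, the static constructor of ScriptMain would only need to call `FolderAssemblyResolver.Register(@"c:\temp\")`. Requests for assemblies that are not in the folder, including satellite resource assemblies, fall through with null.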

If you have trouble reading the DLL path from a variable, here is the workaround. Dts is an instance member of ScriptMain, so it cannot be accessed from a static constructor or a static event handler. Remove the “static” qualifier from the constructor and the event handler declarations and make them public instance members. So static ScriptMain() becomes public ScriptMain(), and static Assembly CurrentDomain_AssemblyResolve(object sender, ResolveEventArgs args) becomes public Assembly CurrentDomain_AssemblyResolve(object sender, ResolveEventArgs args) (the listing below names the handler CurrentDomain_AssemblyReference).

Here is the modified version:

    [Microsoft.SqlServer.Dts.Tasks.ScriptTask.SSISScriptTaskEntryPointAttribute]
    public partial class ScriptMain : Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase
    {
        // The assembly is not registered in the GAC; it is loaded from a folder instead.
        public ScriptMain()
        {
            AppDomain.CurrentDomain.AssemblyResolve += new ResolveEventHandler(CurrentDomain_AssemblyReference);
        }

        public System.Reflection.Assembly CurrentDomain_AssemblyReference(object sender, ResolveEventArgs args)
        {
            //string path = @"C:\Dev\PathToSSISHelper\";
            string path = Dts.Variables["User::PathToSSISHelper"].Value.ToString();

            if (args.Name.Contains("SSISHelper"))
            {
                return System.Reflection.Assembly.LoadFrom(System.IO.Path.Combine(path, "SSISHelper.dll"));
            }

            return null;
        }
        //end of reflection

        .....
    }

If you try to load the assemblies from a network share, you might get a System.NotSupportedException. As a workaround, replace the LoadFile(path) call with UnsafeLoadFrom(path). Use it only for your own or other well-known assemblies, never for assemblies downloaded from unknown authors, because UnsafeLoadFrom bypasses some security checks.

Here is the working code. The referenced DLL is “System.Web.Helpers.dll”, and the network share path is configured in the user variable “LibPath” (VS 2015, SQL Server 2017):

    public System.Reflection.Assembly CurrentDomain_AssemblyResolve(object sender, ResolveEventArgs args)
    {
        string path = Variables.LibPath.ToString();

        if (args.Name.Contains("System.Web.Helpers"))
        {
            return System.Reflection.Assembly.UnsafeLoadFrom(System.IO.Path.Combine(path, "System.Web.Helpers.dll"));
        }

        return null;
    }

    /// <summary>
    /// This method is called once, before rows begin to be processed in the data flow.
    /// </summary>
    public override void PreExecute()
    {
        base.PreExecute();
        AppDomain.CurrentDomain.AssemblyResolve += new ResolveEventHandler(CurrentDomain_AssemblyResolve);
    }