Modern home labs and small hosting environments often grow organically. New services are added over time, ports multiply, and TLS certificates become difficult to manage. Eventually, what started as a simple setup becomes hard to secure and maintain.

Over the last few years, I gradually evolved my lab environment into a structure that separates workloads, automates TLS, and simplifies routing using Traefik as a reverse proxy.

This article summarizes the architecture and lessons learned from running multiple Traefik instances across segmented networks with automated TLS certificates.

The Initial Problem

Typical home lab setups look like this:

service1 → host:9000

service2 → host:9443

service3 → host:8123

service4 → host:8080

Problems quickly appear:

- Too many ports exposed

- TLS certificates become manual work

- Hard to secure services individually

- Debugging routing becomes messy

- Services with different trust levels share the same host and network

As the number of services grows, each of these problems gets harder to manage.

Design Goals

The environment was redesigned around a few simple goals:

- One secure entry point for services

- Automatic TLS certificate management

- Network segmentation between service types

- Clean domain naming

- Failure isolation between environments

- Minimal ongoing maintenance

High-Level Architecture

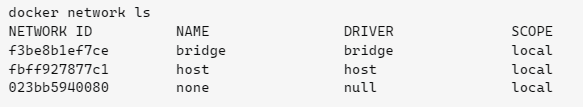

The resulting architecture separates services using VLANs and domain zones.

Internet

↓

DNS

↓

Traefik Reverse Proxy Instances

↓

Segmented Service Networks

Workloads are separated by purpose and risk profile.

Example:

Secure VLAN → internal services

IoT VLAN → containers and test services

Application VLAN → development workloads

Each network segment runs its own services and routing.

Role of Traefik

Traefik serves as the gateway for services by handling:

- HTTPS certificates (Let’s Encrypt)

- Reverse proxy routing

- Automatic service discovery

- HTTPS redirects

- Security headers

Instead of accessing services by ports, everything is exposed through HTTPS:

https://sonarqube.example.com

https://portainer.example.com

https://grafana.example.com

Traefik routes traffic internally to the correct service.
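The idea of routing by hostname instead of by port can be sketched in a few lines of Python. This is a simplified illustration of the concept, not how Traefik is implemented; the backend container names and ports in the table are hypothetical.

```python
from typing import Optional

# Hypothetical routing table: public hostname -> internal backend URL.
# Container names and ports are assumptions for illustration only.
ROUTES = {
    "sonarqube.example.com": "http://sonarqube:9000",
    "portainer.example.com": "http://portainer:9000",
    "grafana.example.com": "http://grafana:3000",
}


def resolve_backend(host_header: str) -> Optional[str]:
    """Return the backend URL for a Host header, or None if no route matches."""
    # Drop an optional ":port" suffix and normalize case,
    # because hostnames are case-insensitive.
    hostname = host_header.split(":", 1)[0].strip().lower()
    return ROUTES.get(hostname)
```

A request for a hostname that is not in the table returns None, which corresponds to the 404 a reverse proxy sends when no router matches.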

TLS Strategy: Subdomain Separation

Instead of issuing an individual certificate for each service, I group services into domain zones.

Example zones:

*.dk.example.com

*.pbi.example.com

*.ad.example.com

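Each zone receives a wildcard certificate. Let’s Encrypt issues wildcard certificates only through the DNS-01 challenge, so each Traefik instance needs API access to the DNS provider for its zone.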

Example services:

sonarqube.dk.example.com

traefik.dk.example.com

grafana.dk.example.com

Benefits:

- One certificate covers many services

- Renewal complexity drops

- Let’s Encrypt rate limits are far less likely to be hit, because new services reuse the existing certificate

- Services can be added freely

- Routing stays simple

Each Traefik instance manages certificates for its own domain zone.
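One detail worth keeping in mind when naming services: a wildcard certificate covers exactly one label below the zone. The sketch below is a minimal Python check of that rule, using the example zones from this article; the function name is my own and purely illustrative.

```python
def covered_by_wildcard(hostname: str, zone: str) -> bool:
    """Return True if hostname is covered by the wildcard certificate *.zone."""
    hostname = hostname.lower().rstrip(".")
    zone = zone.lower().rstrip(".")
    suffix = "." + zone
    if not hostname.endswith(suffix):
        return False
    # Whatever precedes the zone must be a single, non-empty DNS label.
    label = hostname[: -len(suffix)]
    return label != "" and "." not in label
```

With this check, sonarqube.dk.example.com is covered by *.dk.example.com, while a.b.dk.example.com and the bare dk.example.com are not, so deeper names would need their own zone or certificate.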

Why Multiple Traefik Instances?

Rather than centralizing everything in a single proxy, I run multiple Traefik gateways.

Example:

- Unraid services handled by one proxy

- Docker services handled by another

- Podman workloads handled separately

Benefits:

- Failure isolation

- Independent upgrades

- Easier experimentation

- Reduced blast radius during misconfiguration

If one gateway fails, others continue operating.

Operational Benefits Observed

After stabilizing this architecture:

Certificate renewal became automatic

No manual certificate maintenance is required.

Service expansion became simple

New services only need routing rules.
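As a rough illustration of what "routing rules" means in practice, here is a small Python helper that builds the container labels for a new service. It assumes Traefik's Docker provider with label-based configuration (v2/v3 label naming) and an HTTPS entry point named websecure; the entry point name, router name, and port are assumptions, not details of my setup.

```python
from typing import Dict


def traefik_labels(name: str, hostname: str, port: int,
                   entrypoint: str = "websecure") -> Dict[str, str]:
    """Build Docker labels that expose one service through Traefik over HTTPS."""
    router = f"traefik.http.routers.{name}"
    service = f"traefik.http.services.{name}"
    return {
        # Opt the container in to Traefik's service discovery.
        "traefik.enable": "true",
        # Match requests by hostname; Traefik expects backticks around the value.
        f"{router}.rule": f"Host(`{hostname}`)",
        # "websecure" is an assumed entry point name defined in static config.
        f"{router}.entrypoints": entrypoint,
        f"{router}.tls": "true",
        # Port the container listens on inside the Docker network.
        f"{service}.loadbalancer.server.port": str(port),
    }
```

Calling traefik_labels("grafana", "grafana.dk.example.com", 3000) returns a dictionary that can be pasted into a compose file or passed to a container runtime as labels.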

Network isolation improved safety

IoT workloads cannot easily reach secure services.

Troubleshooting became easier

Common issues reduce to:

404 → router mismatch

502 → backend unreachable

TLS error → DNS or certificate issue
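The same checklist can be written down as a tiny Python lookup, which is handy as a starting point for a health-check script. It only encodes the three cases listed above; the function name and the fallback message are my own additions.

```python
from typing import Optional


def likely_cause(status: Optional[int] = None, tls_error: bool = False) -> str:
    """Map a symptom to its most likely cause, following the checklist above."""
    # A failed TLS handshake happens before any HTTP status is returned.
    if tls_error:
        return "DNS or certificate issue"
    if status == 404:
        return "router mismatch"
    if status == 502:
        return "backend unreachable"
    return "not covered by this checklist"
```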

Lessons Learned

Several practical lessons emerged.

Use container names instead of IPs

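A container's IP address can change whenever the container is recreated, while Docker's built-in DNS keeps resolving the container name on user-defined networks.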

Keep services on shared networks

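Traefik can only reach a backend over a network they both share, so attaching each service to the proxy's network keeps routing predictable.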

Remove unnecessary exposed ports

Let Traefik handle public access.

Back up certificate storage

Losing the certificate store forces Traefik to request every certificate again, and repeated re-issuance can run into Let’s Encrypt rate limits.
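A backup does not need to be elaborate. The sketch below copies the certificate store to a timestamped file using only the Python standard library. It assumes the store is a single file, such as the acme.json that Traefik's ACME resolver commonly uses; the paths in the usage note are hypothetical.

```python
import shutil
import time
from pathlib import Path


def backup_cert_store(store: Path, backup_dir: Path) -> Path:
    """Copy the certificate store into backup_dir under a timestamped name."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"{store.stem}-{stamp}{store.suffix}"
    # copy2 also copies permission bits and timestamps where the platform
    # allows, so a restrictive mode on the store carries over to the backup.
    shutil.copy2(store, target)
    return target
```

For example, backup_cert_store(Path("/opt/traefik/acme.json"), Path("/mnt/backup/traefik")) could run from a nightly scheduled job. The store contains private keys, so the backup location should be protected as carefully as the original.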

Avoid unnecessary upgrades

Infrastructure components should change slowly.

Is This Overkill for a Home Lab?

Not necessarily.

As soon as you host multiple services, segmentation and automated TLS reduce maintenance effort and improve reliability.

Even small environments benefit from:

- consistent routing

- secure entry points

- simplified service management

Final Thoughts

Traefik combined with VLAN segmentation and TLS subdomain zoning has provided a stable and low-maintenance solution for managing multiple services.

The environment now:

- renews certificates automatically

- isolates workloads

- simplifies routing

- scales easily

- requires minimal manual intervention

What started as experimentation evolved into a practical architecture pattern that now runs quietly in the background.

And in infrastructure, quiet is success.